A client project, developed in Unity3D in partnership with The Look for the Good Project, that helps kids work through their emotions using an Augmented Reality (AR) app.

ROLE: Product Owner, Product Manager, Designer, Developer

TIMELINE: 8-Month Graduation Project

SKILLS: Unity3D Development, 3D Character Implementation

TOOLS: Unity3D, Maya, Figma, C#

This project is based on content from the partner organization, The Look for the Good Project (LFTG), a non-profit that gives kids access to social-emotional learning programs. I partnered with one of the founders and integrated insights on child development from the organization's workbooks, which are based on in-depth research from child psychologists and industry experts.

This app was created on a contract basis: I licensed my IP to LFTG so that it could be offered as a free app for the community.

Most AR apps currently on the market range from games, toys, and animated filters to very simple educational tools for younger children. There has not been much exploration of AR for social-emotional development in kids, which is why I wanted to use this medium to engage kids who are already digital natives in activities that offer an alternate perspective on how they see their emotions.

The goal of this project was to create an augmented reality tool that helps kids work through their feelings using proven social-emotional reflective practices.

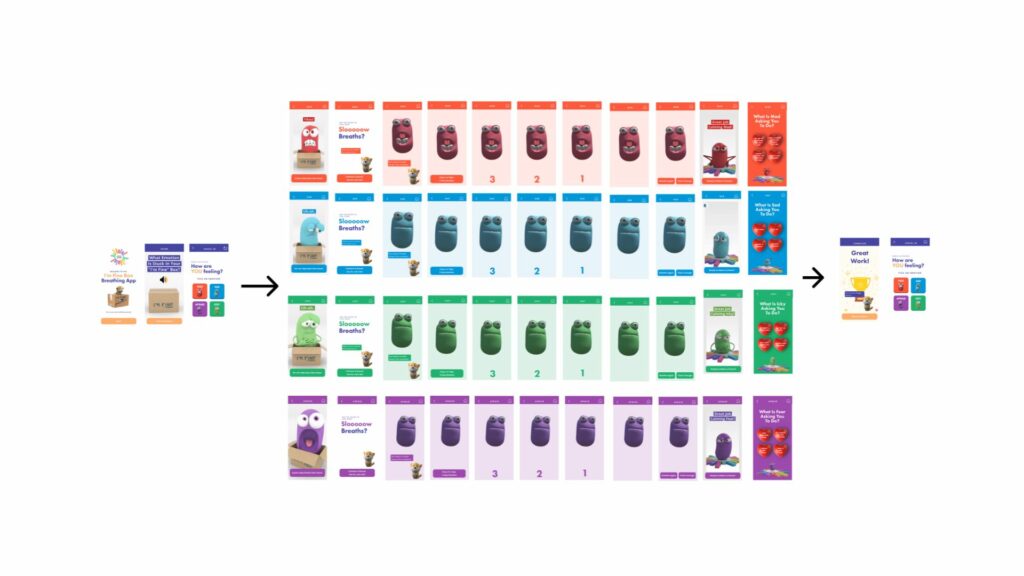

The app focuses on four general emotions, each represented by a 3D character that acts as a companion and guides the user through breathing techniques based on how they're feeling.

How can I create an experience that allows kids to engage and connect with their emotions in order to enhance their social-emotional understanding?

I designed all of the mockups and user flows in Figma.

I used Unity and Maya to develop the final app. In Unity3D, I built the interactions with C# scripts and used Apple ARKit plugins to develop the AR face tracking mechanics.

After several iterations, I created an outline and flow of how the user would move through the activities.

I created “Blendshapes” in Maya, which allow the 3D character to move individual parts of its face (eyes up, down, to the side, etc.).

I imported the 3D characters into Unity and connected the “Blendshapes” to the AR face tracking mechanism (i.e., when your eyes move, the character's eyes move the same way).
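To illustrate the idea, here is a minimal sketch of how tracked face values might drive a character's blendshapes in a Unity C# script. It is not the project's actual script. The component name `FaceBlendshapeDriver`, the `characterFace` field, and the `ApplyCoefficients` method are hypothetical, and the sketch assumes the face tracking plugin supplies coefficients between 0 and 1 keyed by names that match the blendshape names exported from Maya, with blendshape weights on Unity's usual 0 to 100 scale.

```csharp
using System.Collections.Generic;
using UnityEngine;

// Hypothetical component (not from the original project): copies face tracking
// coefficients onto the matching blendshapes of a character's face mesh.
public class FaceBlendshapeDriver : MonoBehaviour
{
    // The character's face mesh, imported from Maya with its blendshapes.
    [SerializeField] private SkinnedMeshRenderer characterFace;

    // Assumption: the face tracking layer calls this on each tracking update,
    // passing coefficients in the 0..1 range keyed by blendshape name.
    public void ApplyCoefficients(IReadOnlyDictionary<string, float> coefficients)
    {
        Mesh mesh = characterFace.sharedMesh;

        foreach (KeyValuePair<string, float> entry in coefficients)
        {
            // GetBlendShapeIndex returns -1 when the mesh has no blendshape with this name.
            int index = mesh.GetBlendShapeIndex(entry.Key);
            if (index < 0)
            {
                continue;
            }

            // Scale the 0..1 coefficient to the assumed 0..100 weight range.
            float weight = Mathf.Clamp01(entry.Value) * 100f;
            characterFace.SetBlendShapeWeight(index, weight);
        }
    }
}
```

Keeping the mapping name-based means a character only needs blendshapes with matching names to respond to the tracked face, which suits duplicated characters that share the same rig.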

I duplicated each of the characters and created the different colours that correspond to the emotion featured in each portion of the app.

I created the app for iOS only for now, as the front-facing face tracking camera is only supported on iOS 12 and above with the Unity engine. I used Xcode to test and run the app, as it was the best way to support the project. I launched the project on TestFlight for preliminary beta users within the partner organization so that they could try the app out for themselves.